Dark Matter and the Totalitarian Principle

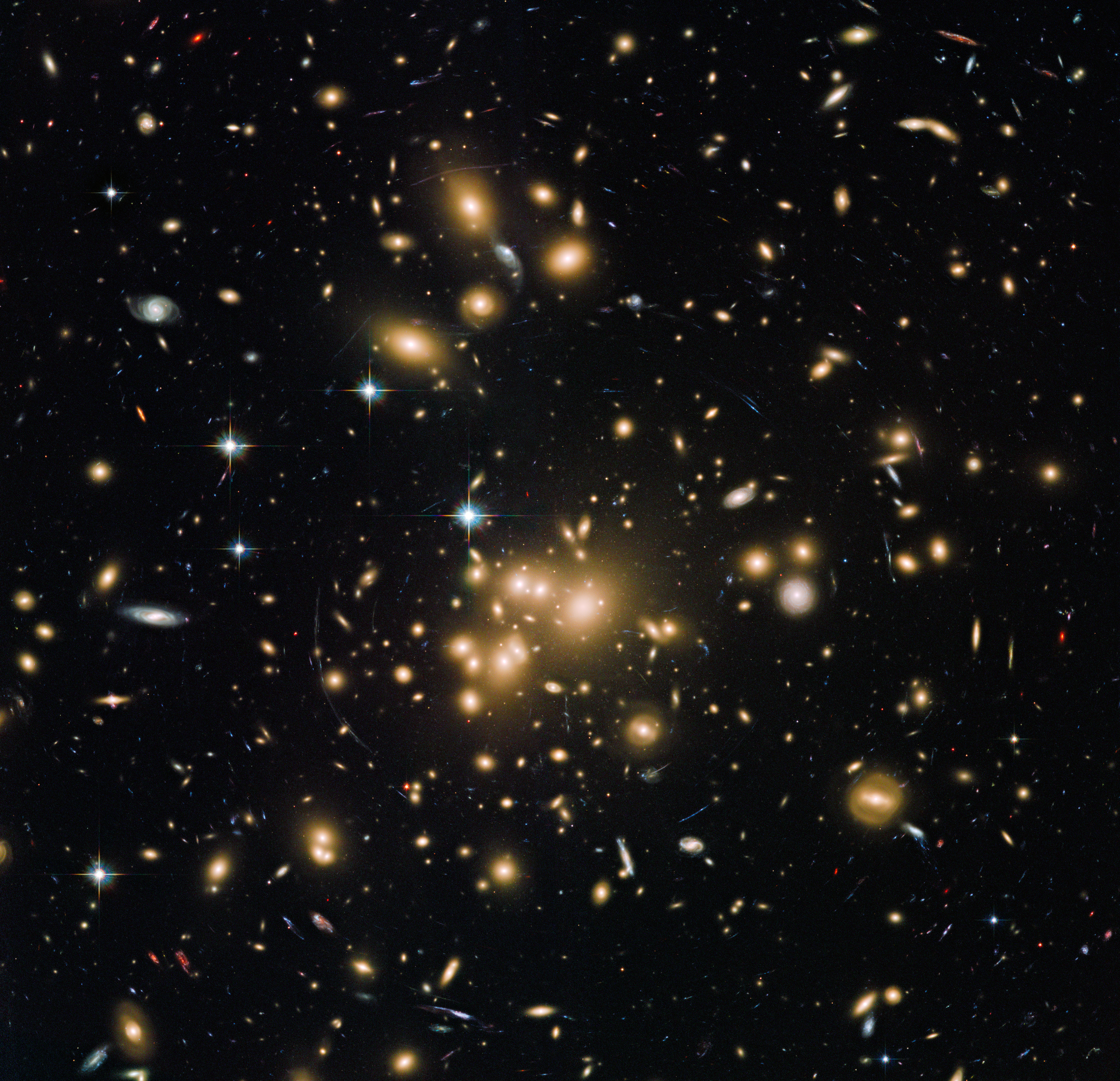

The basic problems with Dark Matter that cosmologists are trying to solve revolve around the fact that the combined gravitational pull of all the detectable matter in the Universe is not sufficient to hold galaxies and clusters of galaxies together. Stars in the outer regions of galaxies orbit far too quickly, and galaxies within clusters move far too quickly, to be held in place by the gravity of the visible matter alone.

While much has been written about Dark Matter as the mechanism that holds the Universe together, most sources on this subject that are available to the general public say two basic things, albeit in slightly different terms each time. The first is that nobody knows what Dark Matter is, but everybody knows it exists because its gravitational effects are visible in the rotation curves of galaxies. The second is that if we are to explain both the distribution of detectable matter in the Universe and the observed expansion rate of the Universe, now constrained to roughly 67.5 km/sec/megaparsec, Dark Matter must make up about 27% of the total mass-energy content of the Universe, roughly five times as much as all the ordinary, detectable matter combined.
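The "about 27%" figure comes from density parameters fitted to observations of the cosmic microwave background. The short Python sketch below shows the arithmetic, assuming rounded inputs close to those published by the Planck collaboration in 2018 (a physical dark matter density Ω_c·h² ≈ 0.120 and a physical ordinary-matter density Ω_b·h² ≈ 0.0224, where h is the expansion rate divided by 100 km/sec/megaparsec). These inputs are approximations chosen for illustration, not a fit.

```python
# Rough arithmetic behind the "about 27%" figure.
# Inputs are rounded values close to the Planck 2018 results (assumptions, not a fit).
H0 = 67.5                 # Hubble constant in km/s/Mpc, as quoted in the text
h = H0 / 100.0            # dimensionless Hubble parameter
omega_c_h2 = 0.120        # assumed physical density of cold dark matter (Omega_c * h^2)
omega_b_h2 = 0.0224       # assumed physical density of ordinary matter (Omega_b * h^2)

# Dividing by h^2 gives each component as a fraction of the critical density,
# i.e. of the total mass-energy content of a spatially flat Universe.
omega_c = omega_c_h2 / h**2
omega_b = omega_b_h2 / h**2

print(f"Dark Matter:      {omega_c:.1%} of the total")
print(f"Ordinary matter:  {omega_b:.1%} of the total")
print(f"Dark-to-ordinary: {omega_c / omega_b:.1f} to 1")
```

With these inputs, Dark Matter comes out at roughly 26% and ordinary matter at roughly 5%, a ratio of a little over five to one; the exact percentages shift slightly with the adopted inputs.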

We need not rehash any of the above here, beyond saying that the recent discovery of two galaxies, designated NGC1052-DF2 and NGC1052-DF4, neither of which appeared to contain meaningful amounts of Dark Matter when they were discovered, ultimately did nothing more than strengthen the case for the universal existence and even distribution of Dark Matter. It turned out that instead of being located about 60 million light years away, both galaxies are much closer to us than their discoverers originally estimated, and both belong to a class of galaxies known as LSB (Low Surface Brightness) galaxies.

These two facts explain two things about these galaxies. The first is that their seemingly abnormal internal motions, which initially suggested a lack of Dark Matter in them, can be explained by their low masses and sparse stellar populations. The second is that the distance discrepancy can be explained by the difficulty of measuring distances to such faint, sparsely populated galaxies.

Thus, when these facts are combined with the revised distances to NGC1052-DF2 and NGC1052-DF4, now estimated at only about 42 million light years for NGC1052-DF2, with a similar distance expected for NGC1052-DF4, the notion that Dark Matter may be unevenly distributed at cosmic scales throughout the Universe can be effectively dispelled.
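Why a smaller distance points to more Dark Matter, not less, can be sketched with a simple scaling argument. The Python below is a rough sketch under stated assumptions: the galaxy's internal velocities (σ) are measured directly and do not depend on distance; its total mass is estimated with a virial-style relation M ∝ σ²R, where the physical radius R grows in proportion to the distance D for a fixed angular size; and its luminosity, and hence its visible stellar mass, grows as D² for a fixed observed brightness. The function name is hypothetical, and the 60 and 42 million light-year figures are the ones quoted in the text.

```python
def mass_to_light_change(d_old: float, d_new: float) -> float:
    """Factor by which a galaxy's dynamical-mass-to-light ratio changes when its
    adopted distance changes from d_old to d_new (any consistent units).

    Hypothetical helper for illustration, assuming:
      * dynamical mass M_dyn ~ sigma**2 * R, with sigma fixed and R proportional to D,
        so M_dyn scales as D;
      * luminosity L = 4 * pi * D**2 * F for a fixed observed flux F,
        so L scales as D**2.
    Hence M_dyn / L scales as 1 / D.
    """
    scale = d_new / d_old
    mass_factor = scale          # M_dyn is proportional to D
    light_factor = scale ** 2    # L is proportional to D**2
    return mass_factor / light_factor


# Distances in millions of light years, as quoted in the text.
factor = mass_to_light_change(60.0, 42.0)
print(f"Mass-to-light ratio rises by a factor of about {factor:.2f}")
```

A higher ratio of total mass to luminous mass leaves more room for Dark Matter. The sketch deliberately ignores each galaxy's detailed structure and all measurement uncertainties.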

Despite the above, though, we still don’t know anything about Dark Matter, except that a) it is present in and around every known galaxy, including NGC1052-DF2 and NGC1052-DF4, and b) it appears to be the dominant source of gravity in the Universe. Nonetheless, research into the origin and nature of Dark Matter is continuing apace, and much of modern theoretical research into Dark Matter now revolves around the original work of physicists Roberto Peccei and Helen Quinn, who in 1977 proposed a new symmetry to explain why the strong nuclear force appears to respect a symmetry (known as “CP” symmetry) that the theory allows it to break. This brings us to the principle that motivates much of this work.

The Totalitarian Principle

Coined by physicist Murray Gell-Mann in 1956, this principle holds that “Everything that is not forbidden is compulsory.” While this principle is often misapplied or misinterpreted, it can be applied correctly to research into Dark Matter if we take it to mean that if no specific conservation law (in quantum physics) forbids a specific interaction between particles, then there is a non-zero* probability that the interaction will occur.
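Read this way, the principle can be turned into a mechanical check: add up the conserved quantities before and after a proposed reaction, and if nothing changes, the reaction is permitted and should eventually be observed. The Python sketch below checks only three conserved quantities (electric charge, baryon number, and electron-lepton number) for a handful of particles. The table and function names are hypothetical, and a real analysis would also have to account for energy, momentum, angular momentum, and other constraints.

```python
# Hypothetical table: (electric charge, baryon number, electron-lepton number).
QUANTUM_NUMBERS = {
    "p": (+1, 1, 0),          # proton
    "n": (0, 1, 0),           # neutron
    "e-": (-1, 0, +1),        # electron
    "e+": (+1, 0, -1),        # positron
    "nu_e": (0, 0, +1),       # electron neutrino
    "anti_nu_e": (0, 0, -1),  # electron antineutrino
    "pi0": (0, 0, 0),         # neutral pion
}
LAWS = ("charge", "baryon number", "lepton number")


def totals(particles):
    """Sum each conserved quantity over a list of particle names."""
    return [sum(QUANTUM_NUMBERS[p][i] for p in particles) for i in range(len(LAWS))]


def violated_laws(initial, final):
    """Return the conservation laws (from LAWS) that a reaction would break."""
    before, after = totals(initial), totals(final)
    return [law for law, b, a in zip(LAWS, before, after) if b != a]


# Neutron decay: nothing in this rule set forbids it, and it is indeed observed.
print(violated_laws(["n"], ["p", "e-", "anti_nu_e"]))   # []
# Proton decaying to a positron and a neutral pion: forbidden by this rule set.
print(violated_laws(["p"], ["e+", "pi0"]))              # ['baryon number', 'lepton number']
# Neutron decay without the antineutrino: forbidden.
print(violated_laws(["n"], ["p", "e-"]))                # ['lepton number']
```

An empty list means "not forbidden" by these three laws, which, under the Totalitarian Principle, is taken to mean the reaction has a non-zero probability of occurring.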

Let us put the proposed new symmetry into some perspective. All known interactions between subatomic particles, apart from those involving gravity, are predicted, described, and explained by the Standard Model. The Standard Model is a set of physical and mathematical rules that has been developed over many decades to explain the nature of the Universe, or at least, much of the nature of the Universe.

More precisely, the Standard Model specifies which symmetries each type of interaction must respect and which it is allowed to break. But as with all rules there are exceptions, or more precisely, interactions that the rules allow but that have never been observed, and it is one such exception that may hold the key to explaining the nature of Dark Matter, albeit indirectly.

The subject of particle interactions is a hugely complicated one and we need not delve into those complexities here, beyond saying that apart from predicting certain interactions, the Standard Model also allows some reactions while it disallows others. To do this, the Standard Model relies on a set of symmetries, including the three discrete symmetries below:

- “C,” or Charge Conjugation, describes interactions in which particles are replaced with their antimatter counterparts.

- “P,” or Parity, describes interactions in which particles are replaced with their mirror-image counterparts, so that left and right are swapped.

- “T,” or Time Reversal, describes interactions in which the direction of time is reversed, so that a process running forward in time is replaced with the same process running backwards in time.
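A rough picture of what the three operations do can be given with a toy model that tracks only a particle's identity, charge, direction of motion, and spin. The Python sketch below is illustrative only: the `State` class and the function names are hypothetical, and real C, P, and T operations act on quantum states (T, for instance, is not an ordinary linear operation), which this toy ignores. It does show that applying any one of the three operations twice returns the original state, and that P turns a right-handed particle into a left-handed one, which is exactly the kind of swap that, experimentally, the weak nuclear force does not treat symmetrically.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class State:
    """Toy one-particle state (hypothetical; quantum phases are ignored)."""
    name: str
    charge: int          # electric charge, in units of the proton charge
    momentum: tuple      # (px, py, pz): an ordinary (polar) vector
    spin: tuple          # (sx, sy, sz): an axial vector


ANTIPARTICLE = {"electron": "positron", "positron": "electron"}


def C(s: State) -> State:
    """Charge conjugation: swap particle and antiparticle; motion and spin unchanged."""
    return replace(s, name=ANTIPARTICLE[s.name], charge=-s.charge)


def P(s: State) -> State:
    """Parity: mirror space through the origin; momentum flips, spin does not."""
    return replace(s, momentum=tuple(-x for x in s.momentum))


def T(s: State) -> State:
    """Time reversal: run the process backwards; momentum and spin both flip."""
    return replace(s, momentum=tuple(-x for x in s.momentum),
                   spin=tuple(-x for x in s.spin))


def handedness(s: State) -> int:
    """Sign of spin along the direction of motion: +1 right-handed, -1 left-handed."""
    dot = sum(p * q for p, q in zip(s.momentum, s.spin))
    return (dot > 0) - (dot < 0)


electron = State("electron", -1, (0, 0, 1), (0, 0, 1))
print(handedness(electron), handedness(P(electron)))   # 1 -1: P swaps handedness
print(C(P(T(electron))))                               # positron, same momentum, spin reversed
```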

An interaction is said to be symmetric under C, P, or T if it proceeds in exactly the same way after the corresponding replacement is made; if it does not, the interaction is said to violate that symmetry. Which forces respect which of these symmetries lies at the heart of the following problem.

Strong CP Problem

Essentially, the Strong CP Problem arises from the way the different forces treat these symmetries. Electromagnetism respects each of the “C,” “P,” and “T” symmetries individually. The weak nuclear force violates both “C” and “P” individually, and also, to a small extent, their combination “CP.” The combination of all three, “CPT,” is believed to be respected by every interaction the Standard Model describes. (Gravity lies outside the Standard Model altogether.)

This is further complicated by the fact that although “CP” violation is allowed in both the weak and the strong nuclear forces, it has only ever been observed in the weak nuclear force. It has never been observed in the strong nuclear force, even though it is allowed there, and measurements of the neutron show that if it occurs there at all, it is at least ten billion times weaker than the theory would naturally lead us to expect. Many researchers take this to mean that the Standard Model is either incomplete or downright wrong.
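How small is "never observed"? In the theory of the strong force, the amount of CP violation is set by an angle usually written θ (theta), which could in principle take any value between roughly −π and π. A non-zero θ would give the neutron a tiny electric dipole moment, which experiments have not found. The Python sketch below turns that null result into a bound on θ, assuming approximate literature values: an experimental upper limit on the neutron's dipole moment of about 1.8 × 10⁻²⁶ e·cm, and a theoretical estimate of about 2.4 × 10⁻¹⁶ e·cm of dipole moment per unit of θ, which is itself uncertain by tens of percent.

```python
# Order-of-magnitude bound on the strong-force CP-violating angle theta.
# Both inputs are approximate literature values, used here as assumptions.
NEUTRON_EDM_LIMIT = 1.8e-26   # experimental upper limit on |d_n|, in e*cm
EDM_PER_THETA = 2.4e-16       # theoretical |d_n| per unit theta, in e*cm (uncertain)

# If d_n is roughly EDM_PER_THETA * theta, then |theta| <= limit / coefficient.
theta_max = NEUTRON_EDM_LIMIT / EDM_PER_THETA

# A "natural" angle would be of order 1; compare the two.
fine_tuning = 1.0 / theta_max

print(f"|theta| must be smaller than about {theta_max:.1e}")
print(f"i.e. roughly {fine_tuning:.0e} times smaller than a natural value of ~1")
```

This is the fine-tuning at the core of the Strong CP Problem: nothing in the Standard Model itself requires θ to be so close to zero.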

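So what does this have to do with Dark Matter? Simply this: in Peccei and Quinn’s theory, the quantity that controls CP violation in the strong force is not a fixed constant but behaves like a field that naturally settles at zero, which would explain why no such violation is seen. The theory also predicts the existence of a new particle, known as the “axion.” If the theory is correct, the axion may be extraordinarily abundant throughout the Universe; it will have no electrical charge, and will be extremely light, but not without mass.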

In practice, experimental searches for axions have been running since the 1980s, following a 1983 proposal by physicist Pierre Sikivie for a way to detect them, and the best known of these, the Axion Dark Matter Experiment (ADMX), has been running since the mid-1990s. Although no axions have been detected to date, the ongoing experiment has been progressively tightening the constraints on both the likelihood that axions exist and the physical properties of axions and other potential axion-like particles. Nonetheless, the putative axion is a near-perfect candidate particle to explain Dark Matter, because its envisioned properties would make it almost impossible to detect except through the prodigious combined gravitational effects of vast numbers of axions, which are currently the only way we have to observe and quantify Dark Matter. (ADMX itself looks for the extremely rare conversion of an axion into a microwave photon inside a strong magnetic field.)
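In practice, haloscope experiments such as ADMX look for an extremely faint signature: the rare conversion of an axion into a microwave photon inside a strong magnetic field, using a resonant cavity that must be tuned to the photon frequency matching the axion's mass. The Python sketch below shows the two conversions involved, assuming the approximate QCD-axion relation m_a ≈ 5.7 μeV × (10¹² GeV / f_a), where f_a is the energy scale at which the Peccei-Quinn symmetry is broken, together with the standard relation E = hν between a photon's energy and its frequency. The function names are hypothetical and are not part of any ADMX software.

```python
# Two approximate conversions behind a haloscope search such as ADMX.
HZ_PER_EV = 2.417989e14   # photon frequency per eV of energy (from E = h * nu)
MASS_AT_1E12_GEV = 5.7    # approx. QCD-axion mass in micro-eV when f_a = 1e12 GeV


def axion_mass_micro_ev(f_a_gev: float) -> float:
    """Approximate QCD-axion mass (micro-eV) for a symmetry-breaking scale f_a (GeV).

    The mass falls as f_a rises, and so does the axion's coupling to ordinary
    matter, which is one reason very light axions are so hard to detect.
    """
    return MASS_AT_1E12_GEV * (1e12 / f_a_gev)


def photon_frequency_mhz(mass_micro_ev: float) -> float:
    """Frequency (MHz) of the photon produced when an axion of this mass converts."""
    return mass_micro_ev * 1e-6 * HZ_PER_EV / 1e6


for f_a in (1e12, 2e12, 5e12):
    m = axion_mass_micro_ev(f_a)
    print(f"f_a = {f_a:.0e} GeV -> m_a = {m:.2f} micro-eV -> {photon_frequency_mhz(m):.0f} MHz")
```

Because the axion's mass is not known in advance, a search of this kind has to step its cavity slowly through a range of frequencies, which is why progress is reported as ever-wider excluded mass ranges.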

As a practical matter, the ongoing Axion Dark Matter Experiment has now ruled out axions with the properties predicted by the most widely studied versions of the Peccei-Quinn theory across a growing range of possible masses. However, the improved constraints on the proposed axion’s properties leave room for either a revised Peccei-Quinn theory or several alternative theories that could lead to the discovery of a viable candidate Dark Matter particle. Such a particle could conceivably both solve the Strong CP Problem and account for at least part of Dark Matter, even if only a minute fraction of Dark Matter turns out to be made up of axions or similar particles.

* Note that in this (statistical) context, “non-zero” means a probability that is greater than zero, however small; it does not mean that the interaction is likely, or that it has already been observed.